This blog is no longer on AWS

I drank the AWS Kool-Aid and set everything up over a weekend. It was exciting and motivating. Hell, it was even stable when I was done.

But for this specific use case, AWS is too complex.

Complexity is great when you have complex requirements, but it can also increase your attack surface if you’re not careful.

yOu’Re NoT a ReAl PrOfEsSiOnAl If YoU dOn’T sEt eVeRyThInG uP mAnUaLLy.

While I agree that there’s a certain pride when it comes to building infrastructure, production will always beat perfect.

I would rather spend time improving my writing than perfecting and babysitting infrastructure.

I want to be clear on this: AWS is awesome.

They provide great services, but for my blog, it was just too much.

More on this at the bottom of the post, but the best way to summarize my reasoning is the age-old tip for making great PowerPoint presentations:

The setup

Before I get into why I made this decision, let’s talk about the environment setup.

- Committing a new blog post to GitHub triggers

- CodePipeline, which grabs the code and kicks off

- CodeBuild to build the artifacts, and

- Deploy to S3.

- The cherry on top: the S3 bucket is fronted by the CloudFront CDN.

Not complex, but not simple either.

Getting code into the AWS ecosystem

I created a project in AWS CodeBuild using AWS CodePipeline as a source provider.

The “source” within AWS CodePipeline links to my GitHub repo.

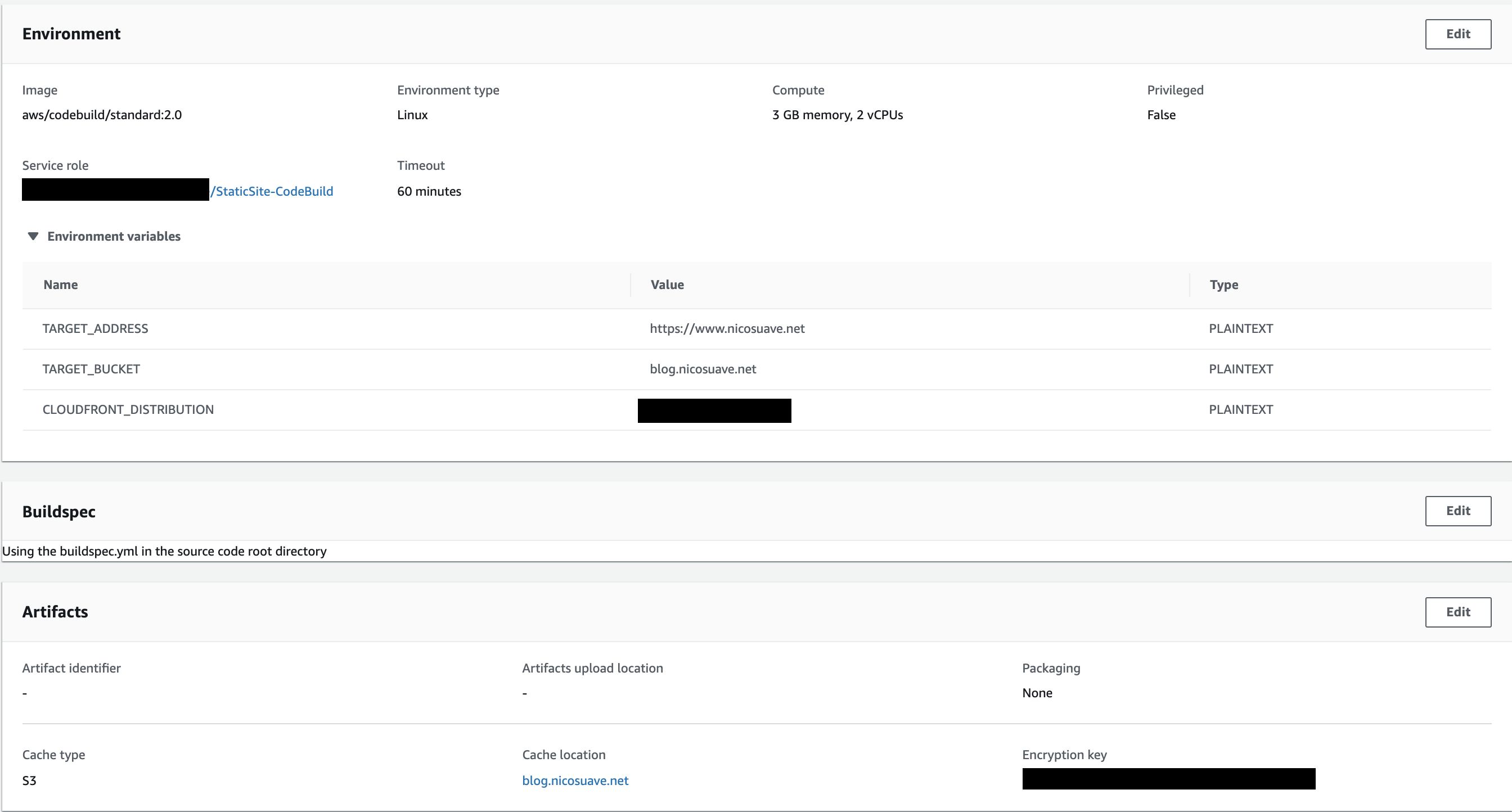

CodeBuild is configured with the following env variables:

buildspec.yml:

    version: 0.2

    phases:
      install:
        runtime-versions:
          nodejs: 10
        commands:
          - "touch .npmignore"
          - "npm install -g gatsby"
      pre_build:
        commands:
          - "yarn install"
      build:
        commands:
          - "gatsby build"
      post_build:
        commands:
          - "yarn deploy"

    artifacts:
      base-directory: public
      files:
        - "**/*"
      discard-paths: no

    cache:
      paths:
        - ".cache/*"
        - "public/*"

Fairly standard stuff when it comes to Gatsby, so no surprises there.
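To make the build flow concrete, here is a minimal sketch that lists the commands from the buildspec in the order CodeBuild runs its phases (install, pre_build, build, post_build). The dict is a hand-copied mirror of the buildspec, and `commands_in_order` is a hypothetical helper for illustration, not part of any AWS tooling.

```python
# Sketch only: a hand-copied mirror of the buildspec phases as a plain dict.
BUILDSPEC_PHASES = {
    "install": ["touch .npmignore", "npm install -g gatsby"],
    "pre_build": ["yarn install"],
    "build": ["gatsby build"],
    "post_build": ["yarn deploy"],
}

# CodeBuild runs the phases in this fixed order.
PHASE_ORDER = ("install", "pre_build", "build", "post_build")


def commands_in_order(phases):
    """Return (phase, command) pairs in the order the phases would run."""
    return [
        (phase, command)
        for phase in PHASE_ORDER
        for command in phases.get(phase, [])
    ]


if __name__ == "__main__":
    for phase, command in commands_in_order(BUILDSPEC_PHASES):
        print(f"[{phase}] {command}")
```

Reading the output top to bottom gives a quick dry run of the pipeline: install Gatsby, install dependencies, build the site, then deploy.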

Deployment Infrastructure

Notice the specific Static Site service role I created for CodeBuild.

It contained two policies, one for CloudFront and another for S3.

CloudFront:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "VisualEditor0",
          "Effect": "Allow",
          "Action": [
            "cloudfront:GetInvalidation",
            "cloudfront:CreateInvalidation"
          ],
          "Resource": "*"
        }
      ]
    }

S3 Policy:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "VisualEditor0",
          "Effect": "Allow",
          "Action": [
            "s3:PutObject",
            "s3:PutBucketWebsite",
            "s3:ListBucket",
            "s3:DeleteObject",
            "s3:GetBucketLocation"
          ],
          "Resource": "arn:aws:s3:::*"
        }
      ]
    }

That means the CodeBuild role can only write to S3 and create (and check on) CloudFront invalidations.

(Yes, I could have scoped this role to a specific CloudFront distribution and S3 bucket, but I wasn’t sure if I wanted to create a new role for each ‘Static Site’ project OR have one ‘Static Site’ role to use for all projects of that type.)
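For anyone who does want the per-project version, here is a rough sketch of what scoping those two policies could look like. It only builds the policy JSON; it doesn't call AWS. The function names are made up for illustration, and the account ID and distribution ID in the usage are placeholders, not real values. The one detail worth noticing is that bucket-level actions (like `s3:ListBucket`) attach to the bucket ARN, while object-level actions (like `s3:PutObject`) attach to `bucket/*`.

```python
import json


def scoped_s3_policy(bucket_name):
    """Build an S3 policy limited to one bucket instead of arn:aws:s3:::*."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level actions apply to the bucket ARN itself.
                "Sid": "BucketLevel",
                "Effect": "Allow",
                "Action": [
                    "s3:ListBucket",
                    "s3:GetBucketLocation",
                    "s3:PutBucketWebsite",
                ],
                "Resource": bucket_arn,
            },
            {
                # Object-level actions apply to the keys inside the bucket.
                "Sid": "ObjectLevel",
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:DeleteObject"],
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


def scoped_cloudfront_policy(account_id, distribution_id):
    """Build a CloudFront policy limited to a single distribution."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvalidateOneDistribution",
                "Effect": "Allow",
                "Action": [
                    "cloudfront:GetInvalidation",
                    "cloudfront:CreateInvalidation",
                ],
                "Resource": (
                    f"arn:aws:cloudfront::{account_id}"
                    f":distribution/{distribution_id}"
                ),
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder account and distribution IDs, for illustration only.
    print(json.dumps(scoped_s3_policy("blog.nicosuave.net"), indent=2))
    print(json.dumps(scoped_cloudfront_policy("123456789012", "EXAMPLE123"), indent=2))
```

The trade-off is the one described above: tighter blast radius per project, at the cost of one more role and policy pair to maintain for every static site.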

Now onto S3, the place where all of the artifacts will be stored. Here’s the config:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "PublicReadGetObject",
          "Effect": "Allow",
          "Principal": "*",
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::blog.nicosuave.net/*"
        }
      ]
    }

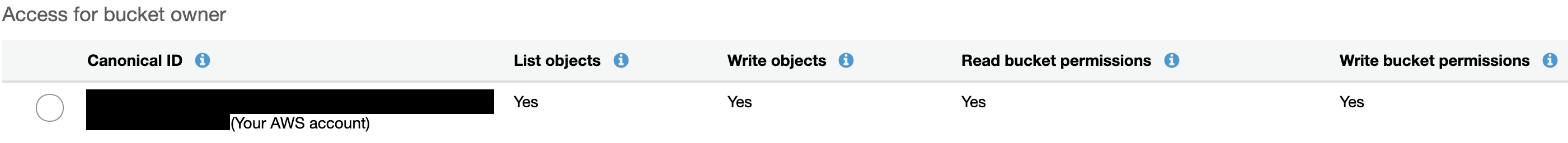

And here’s the S3 Bucket ACL:

Everyone can read from the bucket, and only the CodeBuild role can write to it.

The bucket policy doesn’t grant the public any permission to create, update, or delete objects, so those actions are implicitly denied.
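That “public can read, nothing else” property is easy to sanity-check offline. The sketch below walks a bucket policy document and flags any `Allow` statement with a public principal that grants more than `s3:GetObject`. The helper names are hypothetical, and it is deliberately simplistic: it ignores `Condition`, `NotAction`, `NotPrincipal`, and bucket ACLs, so treat it as a quick smoke test rather than a real audit.

```python
# Assumption: a read-only static blog needs exactly this one public action.
READ_ONLY_ACTIONS = {"s3:GetObject"}


def _as_list(value):
    """Policy fields may be a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def is_public(principal):
    """True for Principal "*" or a mapping such as {"AWS": "*"}."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        return any("*" in _as_list(v) for v in principal.values())
    return False


def public_non_read_statements(policy):
    """Return (Sid, actions) for public Allow statements beyond read-only."""
    findings = []
    for statement in _as_list(policy.get("Statement", [])):
        if statement.get("Effect") != "Allow":
            continue
        if not is_public(statement.get("Principal")):
            continue
        extra = [
            action
            for action in _as_list(statement.get("Action", []))
            if action not in READ_ONLY_ACTIONS
        ]
        if extra:
            findings.append((statement.get("Sid", "<no Sid>"), extra))
    return findings


# The bucket policy from this post.
BLOG_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "PublicReadGetObject",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::blog.nicosuave.net/*",
        }
    ],
}

if __name__ == "__main__":
    print(public_non_read_statements(BLOG_POLICY))
```

An empty list means no public statement grants anything beyond reads. A wildcard like `s3:*` would be flagged too, since it isn’t in the read-only set.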

After that was set up, I wanted to get the blog behind a CDN.

Pretty standard CloudFront config. It simply points to the S3 bucket.

It was also simple on the Google DNS side. I set up a subdomain forward and a custom resource record for ‘www’ that points to CloudFront.

Voila, manually configured CI/CD blog hosted on a CDN!

Now that it’s all set up and CI/CD’d, why do I want to take it down?

Two reasons:

- Complexity

- Risk

This blog is intended to be a read-only static site.

If those requirements ever change, I may reconsider using AWS, since there are SO many more knobs to turn for more complex sites.

Can you imagine how glorious it would be to have a Single Page App hosted on S3 that makes backend calls through API Gateway to talk to a Lambda function that leverages GraphQL to interact with DynamoDB?

Until I have those requirements, this blog will be focused on improving my writing.

Why risk it if there is no biscuit?

One morning, I woke up to the following email:

We’re writing to notify you that your AWS account [redacted] has one or more S3 buckets that allow read or write access from any user on the Internet. By default, S3 buckets allow only the account owner to access the contents of a bucket; however, customers can configure S3 buckets to permit public access.

Unless you have a specific reason (such as hosting a public website) for this configuration, we recommend that you update your bucket and restrict public access.

Blogs are intended to be publicly readable; otherwise, how would anyone read them? Thanks for looking out, Amazon. 👊

At any rate, it was fairly alarming. I was immediately running through my configs to see if I accidentally enabled anyone to write, update, or delete objects in the bucket.

After reviewing the configs, this bucket wasn’t at risk, but it was certainly a wake-up call. Especially as a security professional, that email spooked me.

How could an attacker leverage write-access to an S3 bucket?

- Deface my blog. Change content, upload contradictory content, or delete content.

- Hurt my wallet by uploading a bunch of stuff and making me pay for a bunch of storage.

- Upload a redirect page to a command-and-control (C2) server. If my blog was a “trusted” site, an attacker could have used it as a hop to their C2 infrastructure, effectively bypassing domain-based reputation controls.

The risk of any of those events happening is not worth being complacent and leaving an opportunity out there for attackers. I don’t babysit this infrastructure every day, nor do I plan on it.

If AWS made updates to their platform and my S3 bucket suddenly had public CRUD access, my time to detect/respond/remediate would be pretty slow.

The effort to do what I think is the right thing and minimize risk is absolutely worth it.

So where is this blog hosted now?

Netlify. 1-click deployment to their CDN and minimal configuration management.

With a 1-click deployment model, I don’t have to keep tabs on all the infrastructure and IAM intricacies that made CI/CD possible in a secure way.

A large issue in security is misconfiguration. With cloud security being so nebulous, I’m glad I took the time to do it correctly.

If this blog gets pwned, I’m probably not the only one vulnerable. Netlify would hopefully take notice and resolve the issue much faster than I would.

By the way, AWS offers a similar 1-click service called AWS Amplify, which I actually have my portfolio website on. Comparison post coming soon!